Bot Filtering for Ads - How to remove Invalid Traffic (IVT)

Written by Roy

Sep 26, 2020 • 11 min read

Googlebot crawls the internet 24/7. While doing so, it also loads all the ads it finds on your pages. You don't want to count impressions or clicks made by those bots, crawlers and spiders. Ad servers like AdGlare use bot filtering to remove invalid traffic from your reports.

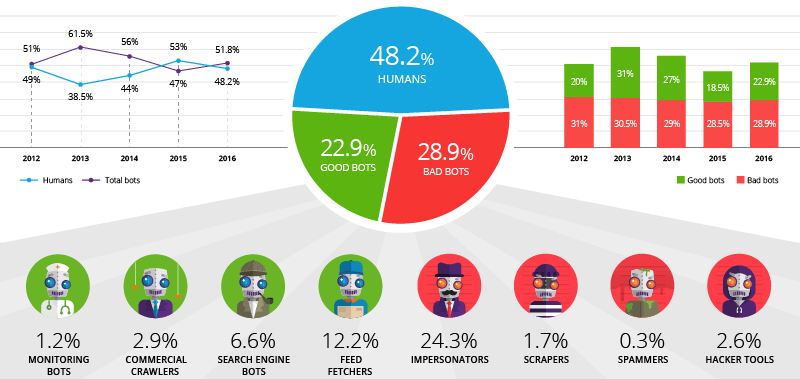

Bot traffic accounts for more than 50% of all global internet traffic, a number too large to ignore. Unless you're running in-house campaigns, it's imperative that your ad server filters bot traffic to avoid report discrepancies and to keep the quality of your sold inventory high.

Half of all internet traffic comes from Bots

Although bot activity fluctuates over the years, we can't deny the huge impact that bots and spiders have on our analytical data. Back in 2016, Incapsula released a great infographic showing where we were heading: bots accounted for 51.8% of all internet traffic.

Source: Incapsula

How does bot detection work?

Genuine bots and crawlers tell us who they are via the User-Agent string that is passed along with each HTTP request. This string will be matched against IAB's list of known bots and spiders to determine if we're dealing with human or non-human traffic. For example, Googlebot uses the following user agent string: Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)
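As a rough illustration of the matching step, here is a minimal Python sketch. It assumes a tiny, hand-picked set of substrings (`KNOWN_BOT_PATTERNS`, a hypothetical name); the actual IAB list is available by subscription only and is far longer, and the function name `is_known_bot` is likewise made up for this example.

```python
import re

# Illustrative substrings only (an assumption for this sketch).
# The real IAB spiders and bots list is licensed and much larger.
KNOWN_BOT_PATTERNS = ["googlebot", "bingbot", "ahrefsbot", "semrushbot"]

# One case-insensitive regex built from the escaped patterns.
_BOT_RE = re.compile(
    "|".join(re.escape(p) for p in KNOWN_BOT_PATTERNS),
    re.IGNORECASE,
)


def is_known_bot(user_agent: str) -> bool:
    """Return True if the User-Agent contains one of the known bot patterns."""
    return bool(user_agent) and _BOT_RE.search(user_agent) is not None


googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
print(is_known_bot(googlebot_ua))  # True
```

Note that this only catches bots that identify themselves honestly. A script that sends a browser-like User-Agent passes this check.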

The Media Rating Council (MRC) has set a standard for detection and filtering of invalid traffic. If the ad serving engines receive a request from such a user agent, an advertisement will be returned but the impression or click will simply not be logged. AdGlare uses this method to make sure page layout remains the same whether a bot or a human visits the page. This is important to let Google determine which content is above the fold - a significant factor in SEO.
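The "serve the ad, but skip the logging" behavior can be sketched as follows. This is a simplified model under stated assumptions, not AdGlare's actual engine code: the `AdEngine` class, its `serve` method, the placeholder markup, and the two bot markers are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AdEngine:
    # Illustrative markers only; a real engine would use the full bot list.
    bot_markers: tuple = ("googlebot", "bingbot")
    # Zone IDs of logged (human) impressions.
    impressions: list = field(default_factory=list)

    def is_bot(self, user_agent: str) -> bool:
        ua = user_agent.lower()
        return any(marker in ua for marker in self.bot_markers)

    def serve(self, zone_id: int, user_agent: str) -> str:
        # The same markup is returned to everyone, so the page layout
        # is identical for bots and humans.
        ad_markup = f'<div class="ad" data-zone="{zone_id}">...</div>'
        # Only non-bot requests are counted as impressions.
        if not self.is_bot(user_agent):
            self.impressions.append(zone_id)
        return ad_markup


engine = AdEngine()
bot_view = engine.serve(1, "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)")
human_view = engine.serve(1, "Mozilla/5.0 (X11; Linux x86_64) Firefox/120.0")
print(bot_view == human_view)    # True: same ad returned
print(len(engine.impressions))   # 1: only the human request was logged
```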

What happens if I don't filter for bots?

In the online advertising industry, publishers are paid to show ads. Advertisers buy inventory to show ads to potential consumers, who are humans, not bots or automated scripts. As most inventory is sold on a CPM basis, it doesn't make sense to serve half of the campaign to non-human traffic. If you don't filter for bots:

It's therefore common practice for advertisers to insist on filtering bots when closing a deal with a publisher or ad network.
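To see why advertisers care, consider the effective price per thousand human impressions when bot impressions are billed as well. The figures below (a $2.00 CPM, 100,000 impressions, a 50% bot share) are hypothetical and chosen only to make the arithmetic easy to follow; `effective_cpm` is a name invented for this sketch.

```python
def effective_cpm(cpm: float, impressions: int, bot_share: float) -> float:
    """Cost per 1,000 human impressions when bot impressions are billed too."""
    if not 0 <= bot_share < 1:
        raise ValueError("bot_share must be in the range [0, 1)")
    cost = cpm * impressions / 1000            # what the advertiser pays
    human_impressions = impressions * (1 - bot_share)
    return cost * 1000 / human_impressions


# Hypothetical campaign: $2.00 CPM, 100,000 billed impressions, half of them bots.
print(effective_cpm(2.0, 100_000, 0.5))  # 4.0
```

In this example the advertiser pays $200 but reaches only 50,000 humans, so the price actually paid per thousand human impressions doubles from $2.00 to $4.00.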

The Complete Bot List of 2024

The Interactive Advertising Bureau (IAB) maintains a list of all known bots and spiders. Ad tech companies like AdGlare can subscribe to this list to make sure we're all filtering the same types of bots. We're filtering for the following:

360Spider ADMantX AHrefs Bot Aboundexbot Acoon AddThiscom Alexa Crawler Amorank Spider Analytics SEO Crawler ApacheBench Applebot Archiveorg Bot Ask Jeeves BLEXBot Crawler BUbiNG BacklinkCheckde BacklinkCrawler Baidu Spider BazQux Reader BingBot BitlyBot Blekkobot Bloglovin Blogtrottr Bountii Bot Browsershots Butterfly Robot CSS Certificate Spider CareerBot Castro 2 Catchpoint Cc Cc Bot CcBot Crawler Charlotte Cliqzbot CloudFlare AMP Fetcher CloudFlare Always Online Collectd CommaFeed Datadog Agent Dataprovider Daum Dazoobot Discobot Domain ReAnimator Bot DotBot DuckDuckGo Bot EMail Exractor Easou Spider EmailWolf Evcbatch ExaBot ExactSeek Crawler Ezooms Facebook External Hit Feed Wrangler FeedBurner Feedbin Feedly Feedspot Fever Findxbot Flipboard Generic Bot Generic Bot Genieo Web Filter Gigablast Gigabot Gluten Free Crawler Gmail Image Proxy Goo Google PageSpeed Insights Google Partner Monitoring Google Structured Data Testing Tool Googlebot Grapeshot HTTPMon Heritrix Heureka Feed HubPages HubSpot ICCCrawler IIS Site Analysis IPGuide Crawler IPS Agent Ichiro Inktomi Slurp Kouio LTX71 Larbin Web Crawler Lets Encrypt Validation Lighthouse Linkdex Bot LinkedIn Bot Lycos MJ12 Bot MagpieCrawler MagpieRSS MailRu Bot Masscan Meanpath Bot MetaInspector MetaJobBot Mixrank Bot Mnogosearch MojeekBot MonitorUs Munin NLCrawler Nagios Checkhttp NalezenCzBot NetEstate NetLyzer FastProbe NetResearchServer Netcraft Survey Bot Netvibes NewsBlur NewsGator Nmap Nutchbased Bot Octopus Omgili Bot OpenLinkProfiler OpenWebSpider Openindex Spider Orange Bot Outbrain PHP Server Monitor PagePeeker PaperLiBot Phantomas Picsearch Bot Pingdom Bot Pinterest PocketParser Pompos PritTorrent QuerySeekerSpider Qwantify ROI Hunter Rainmeter RamblerMail Image Proxy Reddit Bot Riddler Rogerbot SEOENGBot SEOkicksRobot SISTRIX Crawler SSL Labs SafeDNSBot Scooter ScoutJet Scrapy Screaming Frog SEO Spider ScreenerBot Semrush Bot Sensika Bot Sentry Bot Seoscannersnet Server Density Seznam Bot Seznam Email Proxy Seznam Zbozicz ShopAlike ShopWiki SilverReader SimplePie Site24x7 Website Monitoring SiteSucker Sixych Skype URI Preview Slackbot Sogou Spider Soso Spider Sparkler Speedy Spinn3r Sputnik Bot Sqlmap StatusCake Superfeedr Bot Survey Bot TLSProbe Tarmot Gezgin TelgramBot TinEye Crawler Tiny Tiny RSS Trendiction Bot TurnitinBot TweetedTimes Bot Tweetmeme Bot Twitterbot URLAppendBot UkrNet Mail Proxy UniversalFeedParser Uptime Robot Uptimebot Vagabondo Visual Site Mapper Crawler W3C CSS Validator W3C I18N Checker W3C Link Checker W3C Markup Validation Service W3C MobileOK Checker W3C Unified Validator Wappalyzer WeSEESearch WebSitePulse WebThumbnail WebbCrawler Willow Internet Crawler WordPress Wotbox YaCy Yahoo Cache System Yahoo Gemini Yahoo Link Preview Yahoo Slurp Yandex Bot YetiNaverbot Yottaa Site Monitor Youdao Bot Yourls Yunyun Bot Zao Zgrab Zookabot ZumBot

How to enable Bot Filtering in AdGlare

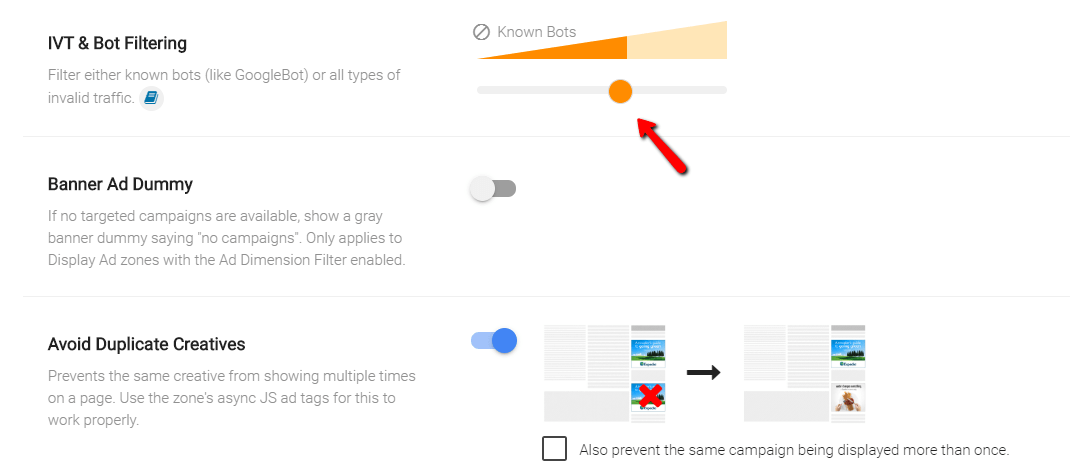

It's highly recommended to enable bot filtering to minimize discrepancies with third-party ad servers, especially if you're a publisher. To do so, follow these steps:

1. Click Settings and then Global Settings
2. Click the Engine Nodes tab
3. Enable IVT & Bot Filtering

Filtering IP addresses from Malicious Networks

In addition to filtering invalid traffic from bots, you may also want to consider filtering requests made from known malicious networks. A quick search on Google can provide you with a list of IP addresses (likely in CIDR notation) from networks known to be infected with software that automatically crawls pages to artificially inflate impressions. AdGlare can filter those impressions and clicks at two levels:

Note that IP filtering works slightly differently than described above. Instead of returning an ad, the engines will simply respond with 'no ads available' for requests made from those IP ranges. The end result is the same: these impressions and clicks are not logged whatsoever, keeping your statistical reports free of bot traffic.
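The IP-based variant can be sketched with Python's standard `ipaddress` module. The CIDR ranges below are reserved documentation ranges used purely as placeholders, and `BLOCKED_NETWORKS`, `is_blocked` and `handle_request` are hypothetical names for this example rather than anything from AdGlare.

```python
import ipaddress

# Placeholder CIDR ranges (reserved documentation ranges), standing in
# for a real list of known malicious networks.
BLOCKED_NETWORKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("192.0.2.0/24", "198.51.100.0/25")
]


def is_blocked(ip: str) -> bool:
    """Return True if the address falls inside any blocked CIDR range."""
    try:
        addr = ipaddress.ip_address(ip)
    except ValueError:
        # Unparseable addresses are treated as not blocked in this sketch.
        return False
    return any(addr in net for net in BLOCKED_NETWORKS)


def handle_request(ip: str) -> str:
    # Blocked ranges get no ad at all, so nothing is logged for them.
    if is_blocked(ip):
        return "no ads available"
    return "<ad markup>"


print(handle_request("192.0.2.55"))   # no ads available
print(handle_request("203.0.113.9"))  # <ad markup>
```

Treating an unparseable address as not blocked is a design choice made here for simplicity; a stricter setup might reject such requests instead.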

More ways to increase inventory quality

Now that you're on track to improve your CTR and the quality of your inventory, it's worth considering the following practices as well.
